How BPE works - the tokenization algorithm used by large language models

A walkthrough of BPE, with a worked example and Python implementations.

Byte pair encoding (BPE) is a tokenization algorithm used by large language models such as GPT, LLaMA, RoBERTa, etc. It’s not the only tokenization algorithm, but many popular models of the current LLM generation use it.

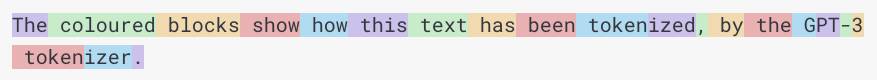

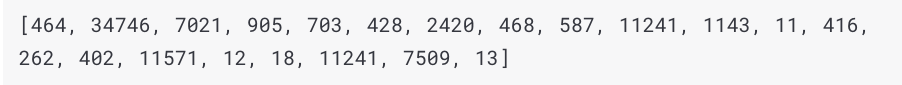

The following screenshots from platform.openai.com/tokenizer illustrate the result of running GPT-3’s BPE tokenizer on some text (i.e. a string of characters).

Training a BPE tokenizer

The algorithm for training a BPE tokenizer is:

- Start off with initial set of tokens (e.g. single characters for these examples, but we could treat the text as a stream of bytes and use single bytes as our initial set of tokens).

- Use this initial set of tokens to tokenize your text.

- Step through and count how many times each pair of tokens appears, (a pair is when two tokens are next to each other in the text).

- Take the pair of tokens that appeared the most, combine them, and add this as a new token.

- Repeat this process a number of times.

The following example shows this process.

Worked example

text = "aa abc abc"

# Iteration 1

tokens = [" ", "a", "b", "c"]

tokenized_text = ["a", "a", " ", "a", "b", "c", " ", "a", "b", "c"]

counts = [

("a", "a"): 1,

("a", " "): 1,

(" ", "a"): None, # <- skip (" ", <tok>) to avoid counting across words

("a", "b"): 2, # <- select max of counts to merge (if multiple max vals, take the first one)

("b", "c"): 2,

("c", " "); 1,

]

new_token = "ab"

# Iteration 2

tokens = [" ", "a", "b", "c", "ab"]

tokenized_text = ["a", "a", " ", "ab", "c", " ", "ab", "c"]

counts = [

("a", "a"): 1,

("a", " "): 1,

("ab", "c"): 2,

("c", " "): 1,

]

new_token = "abc"

# Iteration 3

tokens = [" ", "a", "b", "c", "ab", "abc"]

tokenized_text = ["a", "a", " ", "abc", " ", "abc"]

counts = [

("a", "a"): 1,

("a", " "): 1,

("abc", " "): 1

]

new_token = "aa"

# Iteration 4

tokens = [" ", "a", "b", "c", "ab", "abc", "aa"]

tokenized_text = ["aa", " ", "abc", " ", "abc"]

counts = [

("aa", " "): 1,

("abc", " "): 1

]

new_token = "aa"

# We'll stop here

(In practice we are likely to stop if there are no counts above 1.)

Python implementation, from Sennrich et al.

Here is an implementation of the BPE algorithm, adapted from “Algorithm 1” in Sennrich et al.. It differs from the example above in that it splits and counts the words first; and then uses spaces to distinguish tokens, modifying the strings as it iterates; but both approaches end up with the same result. (In practice, when dealing with large corpuses of text, a streaming approach, more similar to the worked example above would be taken.)

import re

import collections

words_and_counts = {

"a a </w>": 1,

"a b c </w>": 1,

"a b c": 1,

}

num_merges = 4

print(f"Words and counts: {words_and_counts}\n")

for i in range(num_merges):

# Count the frequency of each pair of tokens

counts = collections.defaultdict(int)

for word, freq in words_and_counts.items():

symbols = word.split()

for j in range(len(symbols) - 1):

counts[symbols[j], symbols[j + 1]] += freq

best = max(counts, key=counts.get)

# Merge the pair of tokens with the highest frequency

merged_vocab = {}

bigram = re.escape(" ".join(best))

p = re.compile(r"(?<!\S)" + bigram + r"(?!\S)")

for word in words_and_counts:

w_out = p.sub("".join(best), word)

merged_vocab[w_out] = words_and_counts[word]

words_and_counts = merged_vocab

print(f"Iteration: {i + 1}")

print(f"New token: {best} -> {''.join(best)}")

print(f"Words and counts: {words_and_counts}")

print()

Output:

Words and counts: {'a a </w>': 1, 'a b c </w>': 1, 'a b c': 1}

Iteration: 1

New token: ('a', 'b') -> ab

Words and counts: {'a a </w>': 1, 'ab c </w>': 1, 'ab c': 1}

Iteration: 2

New token: ('ab', 'c') -> abc

Words and counts: {'a a </w>': 1, 'abc </w>': 1, 'abc': 1}

Iteration: 3

New token: ('a', 'a') -> aa

Words and counts: {'aa </w>': 1, 'abc </w>': 1, 'abc': 1}

Iteration: 4

New token: ('aa', '</w>') -> aa</w>

Words and counts: {'aa</w>': 1, 'abc </w>': 1, 'abc': 1}

Streaming implementation, using a trie

Here’s a basic “streaming” implementation of BPE I’ve written (unlike the above it looks over the text without modifying it). It uses a trie, to work out which token to use for an expanding substring (to use the longest possible token for a string, rather than the first match, e.g. “aa” should be tokenized to “aa”, not “a” and “a”).

from collections import defaultdict

# Streaming version

text = "aa abc abc"

trie = {"a": {}, "b": {}, "c": {}}

for _ in range(4):

pair_counts = defaultdict(int)

prev_token = None

i = 0

j = 0

node = trie

while i < len(text) and j < len(text):

j += 1

try:

node = node[text[j - 1]]

node[text[j]] # test if next step in trie

except (KeyError, IndexError):

if prev_token and prev_token[-1] != " ":

pair_counts[(prev_token, text[i:j])] += 1

prev_token = text[i:j]

node = trie

i = j

merge = max(pair_counts, key=pair_counts.get)

new_token = "".join(merge)

print(f"Merging {merge} into `{new_token}`")

# Add new token to trie

node = trie

for char in new_token:

try:

node = node[char]

except KeyError:

node[char] = {}

node = node[char]

Merging ('a', 'b') into `ab`

Merging ('ab', 'c') into `abc`

Merging ('a', 'a') into `aa`

Merging ('aa', ' ') into `aa `

Further comments

We would also number the tokens we end up with, in order to pass a list of integers to our model.

E.g.

tokens = [" ", "a", "b", "c", "ab", "abc", "aa"]

token_ids = [ 0, 1, 2, 3, 4, 5, 6]

tokenized_text = ["aa", " ", "abc", " ", "abc"]

encoded_text = [ 6, 0, 5, 0, 5]

Note that there are various different implementation choices / behaviours in the wild.

Another commonly used tokenization algorithm is WordPiece, which has some similarities to BPE, but rather than simply using counts, a divisor is included, and its initialisation and merging rules are slightly different.

Finally, recent work, such as Megabyte, 2023, removes tokenization from transformer models entirely, so it will be interesting to see whether tokenizer-free approaches become widely adopted or not.

References:

- Sennrich, Rico, Barry Haddow, and Alexandra Birch. “Neural machine translation of rare words with subword units.” arXiv preprint arXiv:1508.07909 (2015). https://arxiv.org/abs/1508.07909

- Hugging Face NLP Course, Byte-Pair Encoding tokenization, https://huggingface.co/learn/nlp-course/chapter6/5?fw=pt

- https://simonwillison.net/2023/Jun/8/gpt-tokenizers/

- Gage, Philip. “A new algorithm for data compression.” C Users Journal 12.2 (1994): 23-38. http://www.pennelynn.com/Documents/CUJ/HTML/94HTML/19940045.HTM

- https://en.wikipedia.org/wiki/Byte_pair_encoding

- https://en.wikipedia.org/wiki/Trie

- https://github.com/google/sentencepiece

- https://github.com/huggingface/tokenizers

- https://github.com/openai/tiktoken

- Yu, Lili, et al. “Megabyte: Predicting million-byte sequences with multiscale transformers.” arXiv preprint arXiv:2305.07185 (2023). https://arxiv.org/abs/2305.07185