Visual content search over music videos - demo

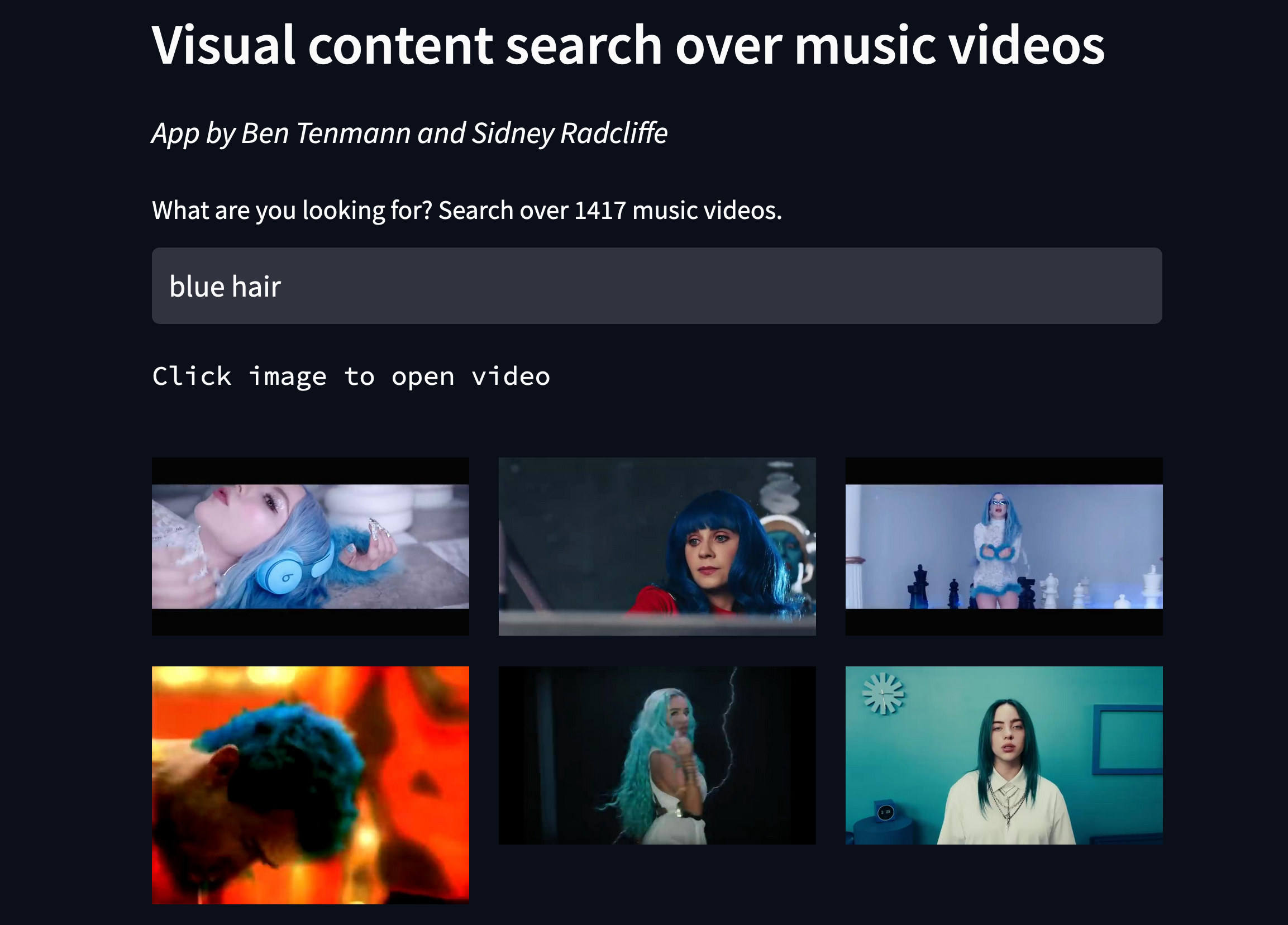

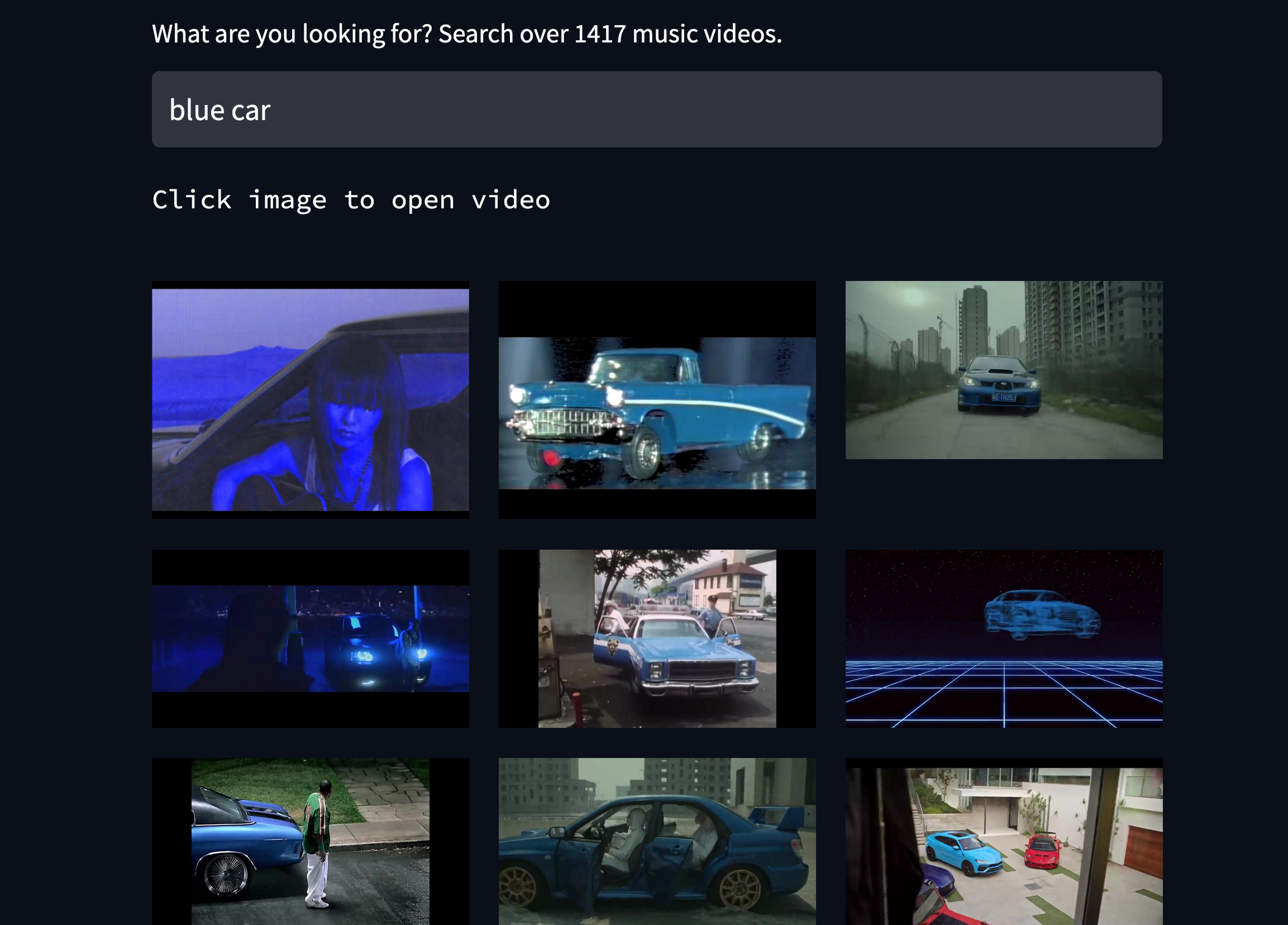

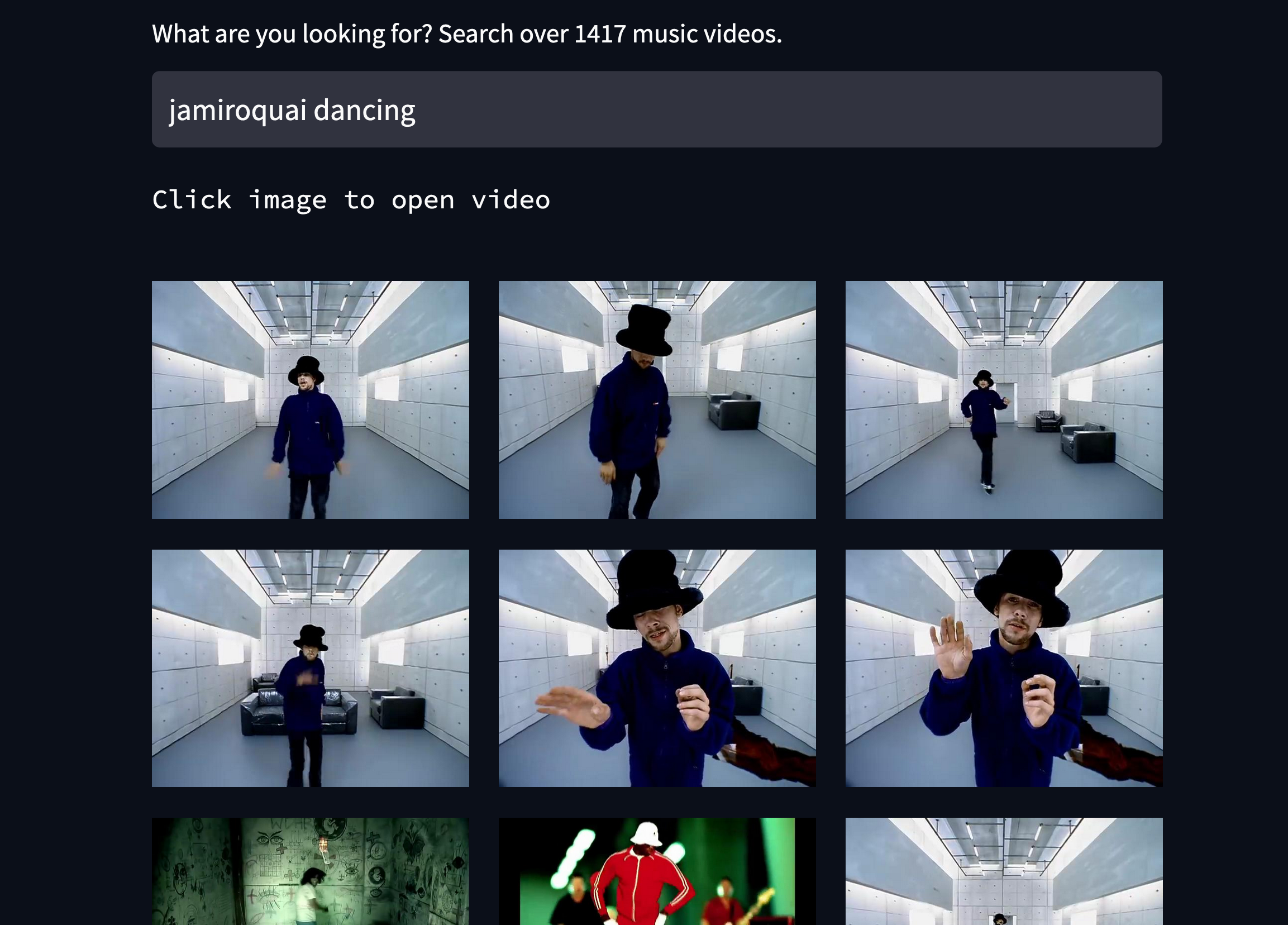

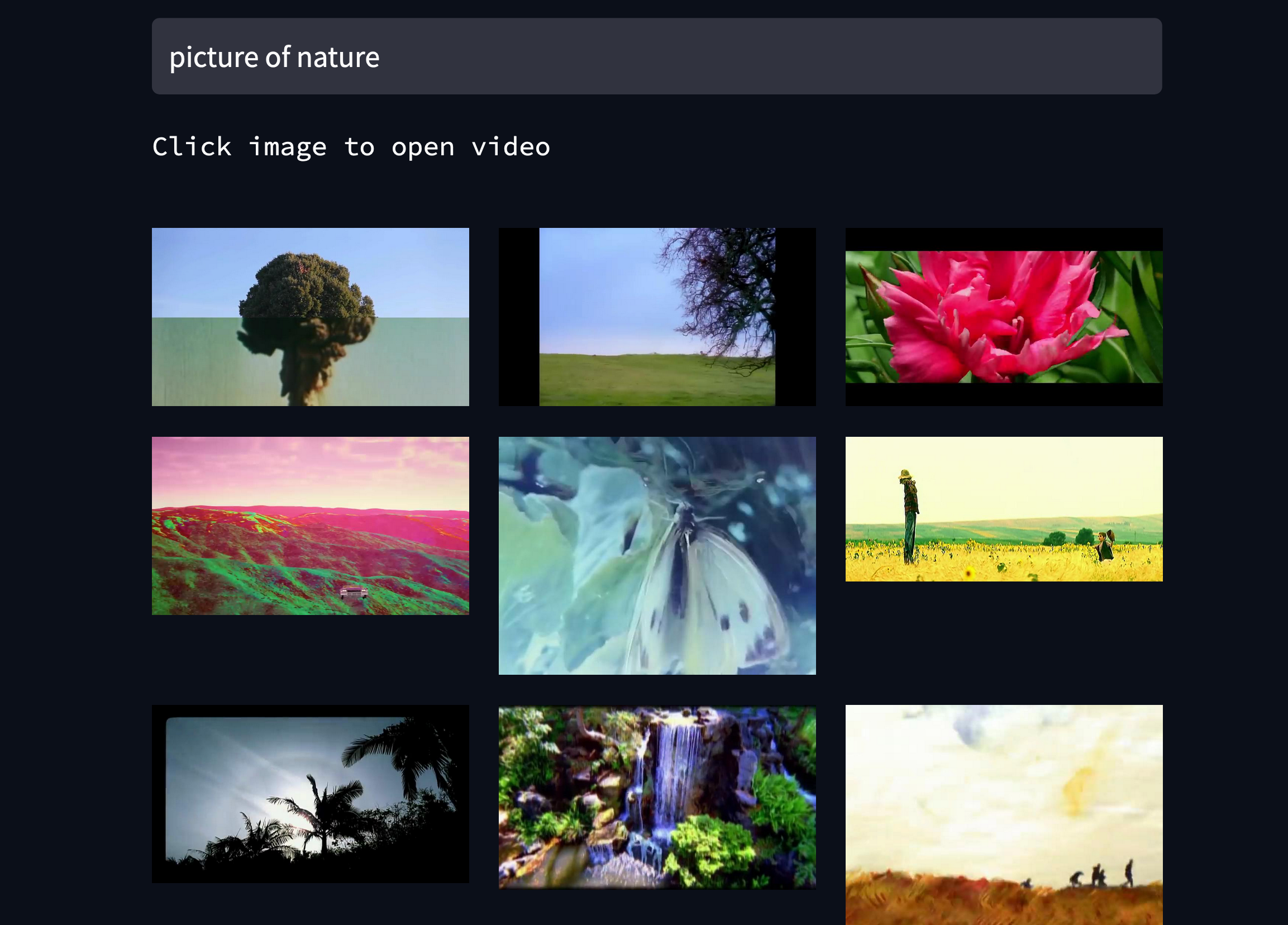

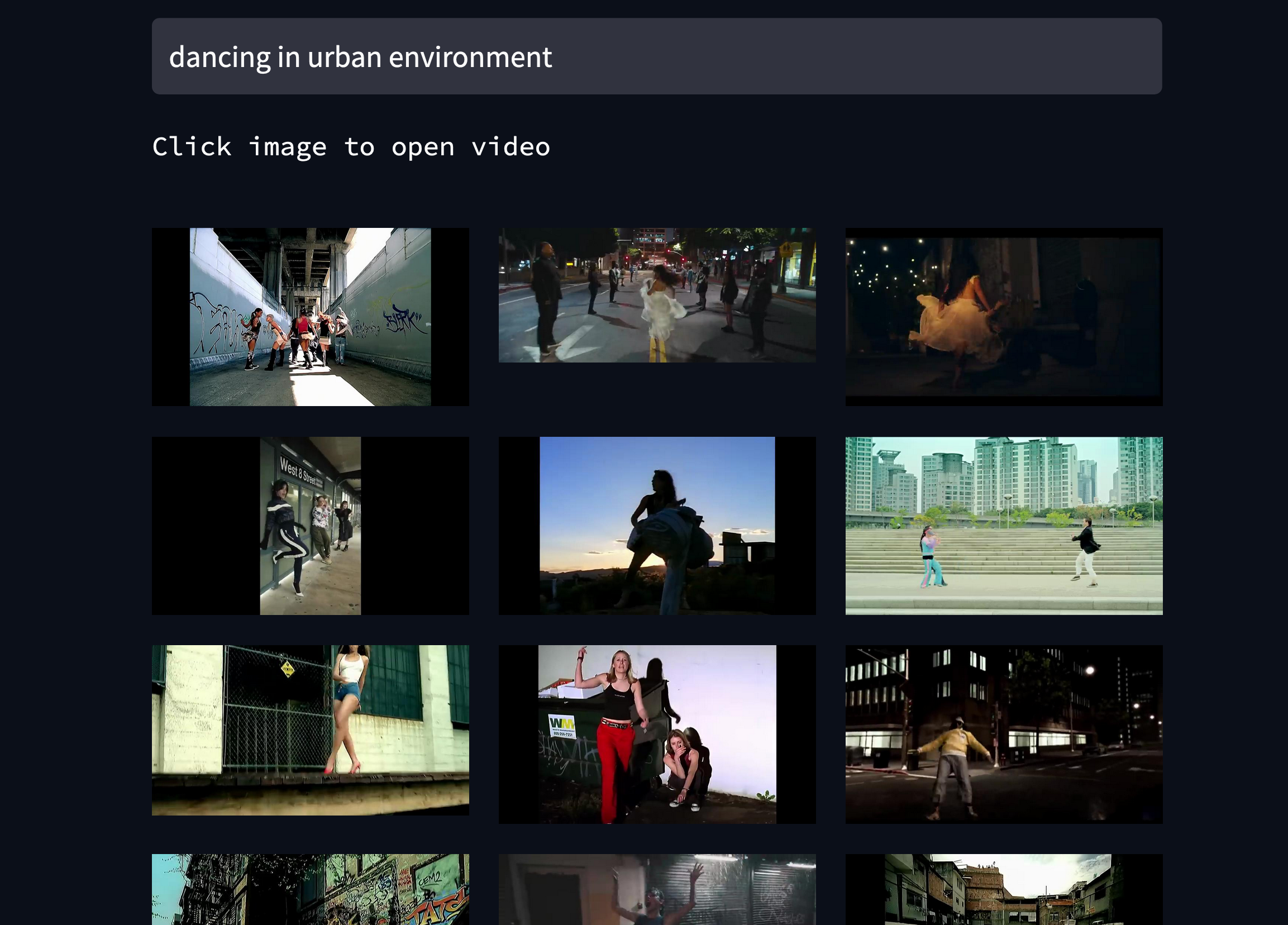

For our demo, we took ~1400 music videos and turned the frames into embeddings, making it possible to search over the visual content of the videos. I wrote a blog post on how it works here. You can try it out here. The source code is here. Here are some examples:

I wrote a more detailed post about how to implement this kind of thing here. Ben wrote about the demo here.
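The core search step can be sketched roughly as follows. This is a minimal sketch, assuming every frame has already been turned into an embedding vector and the query has been embedded into the same vector space. The post does not say which model the demo uses, and its actual code may differ. Frames are ranked by cosine similarity, a common choice for comparing embeddings. The `(video_id, timestamp)` keys, the function names and the toy 3-dimensional vectors are all hypothetical.

```python
import math

# A frame is identified by (video_id, timestamp_in_seconds); hypothetical keying.
FrameKey = tuple[str, float]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)


def search(
    query: list[float], index: dict[FrameKey, list[float]], k: int = 3
) -> list[tuple[FrameKey, float]]:
    """Brute-force scan: score every frame against the query, return the top k."""
    scored = [(key, cosine_similarity(query, vec)) for key, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy 3-d vectors standing in for real frame embeddings (made up for illustration).
example_index: dict[FrameKey, list[float]] = {
    ("video_a", 12.0): [0.9, 0.1, 0.0],
    ("video_a", 12.5): [0.88, 0.12, 0.01],
    ("video_b", 40.0): [0.1, 0.9, 0.2],
    ("video_c", 3.0): [0.0, 0.2, 0.95],
}

# Pretend this is the embedding of a user's query (e.g. a text prompt).
results = search([1.0, 0.0, 0.0], example_index, k=2)
for (video_id, timestamp), score in results:
    print(f"{video_id} @ {timestamp}s  similarity={score:.3f}")
```

Here both top hits are almost identical frames half a second apart in `video_a`. That is the kind of redundancy that removing very similar frames would address.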

There are a few improvements we could make to this:

- increase the number of videos (more videos means a better chance of finding what you are looking for)

- remove very similar frames, such as near-identical consecutive frames from the same shot

- group frames by video source

- [your suggestions here…]
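Removing very similar frames and grouping frames by video source could be handled together after the search step. This is a sketch under stated assumptions: search is assumed to return a ranked list of `(frame, score)` pairs, and the frame embeddings are assumed to be at hand. It greedily drops any hit whose embedding is nearly identical to one already kept, then groups what is left by video. The 0.98 threshold, the function names and the toy vectors are illustrative choices, not values from the demo.

```python
import math

FrameKey = tuple[str, float]  # (video_id, timestamp_in_seconds); hypothetical keying
Hit = tuple[FrameKey, float]  # (frame, similarity score)


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def drop_near_duplicates(
    hits: list[Hit], embeddings: dict[FrameKey, list[float]], threshold: float = 0.98
) -> list[Hit]:
    """Walk hits in rank order; keep one only if it is not too similar to any hit already kept."""
    kept: list[Hit] = []
    for key, score in hits:
        if all(cosine_similarity(embeddings[key], embeddings[k]) < threshold for k, _ in kept):
            kept.append((key, score))
    return kept


def group_by_video(hits: list[Hit]) -> dict[str, list[tuple[float, float]]]:
    """Group hits by video. Dicts keep insertion order, so videos appear in order of their best hit."""
    groups: dict[str, list[tuple[float, float]]] = {}
    for (video_id, timestamp), score in hits:
        groups.setdefault(video_id, []).append((timestamp, score))
    return groups


# Toy embeddings (made up for illustration).
embeddings: dict[FrameKey, list[float]] = {
    ("video_a", 12.0): [0.9, 0.1, 0.0],
    ("video_a", 12.5): [0.88, 0.12, 0.01],  # near-duplicate of the frame above
    ("video_a", 95.0): [0.6, 0.0, 0.8],
    ("video_b", 40.0): [0.7, 0.7, 0.0],
}

# Ranked hits as a search step might return them
# (scores are cosine similarity to the query [1, 0, 0], rounded).
ranked: list[Hit] = [
    (("video_a", 12.0), 0.994),
    (("video_a", 12.5), 0.991),
    (("video_b", 40.0), 0.707),
    (("video_a", 95.0), 0.600),
]

deduped = drop_near_duplicates(ranked, embeddings)
grouped = group_by_video(deduped)
for video_id, frames in grouped.items():
    print(video_id, frames)
```

The greedy pass compares each hit against every hit already kept, which is cheap for a single page of results. A higher threshold keeps more visually similar frames, so it is worth tuning by eye.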

(Looking forward to seeing video services implement this!)