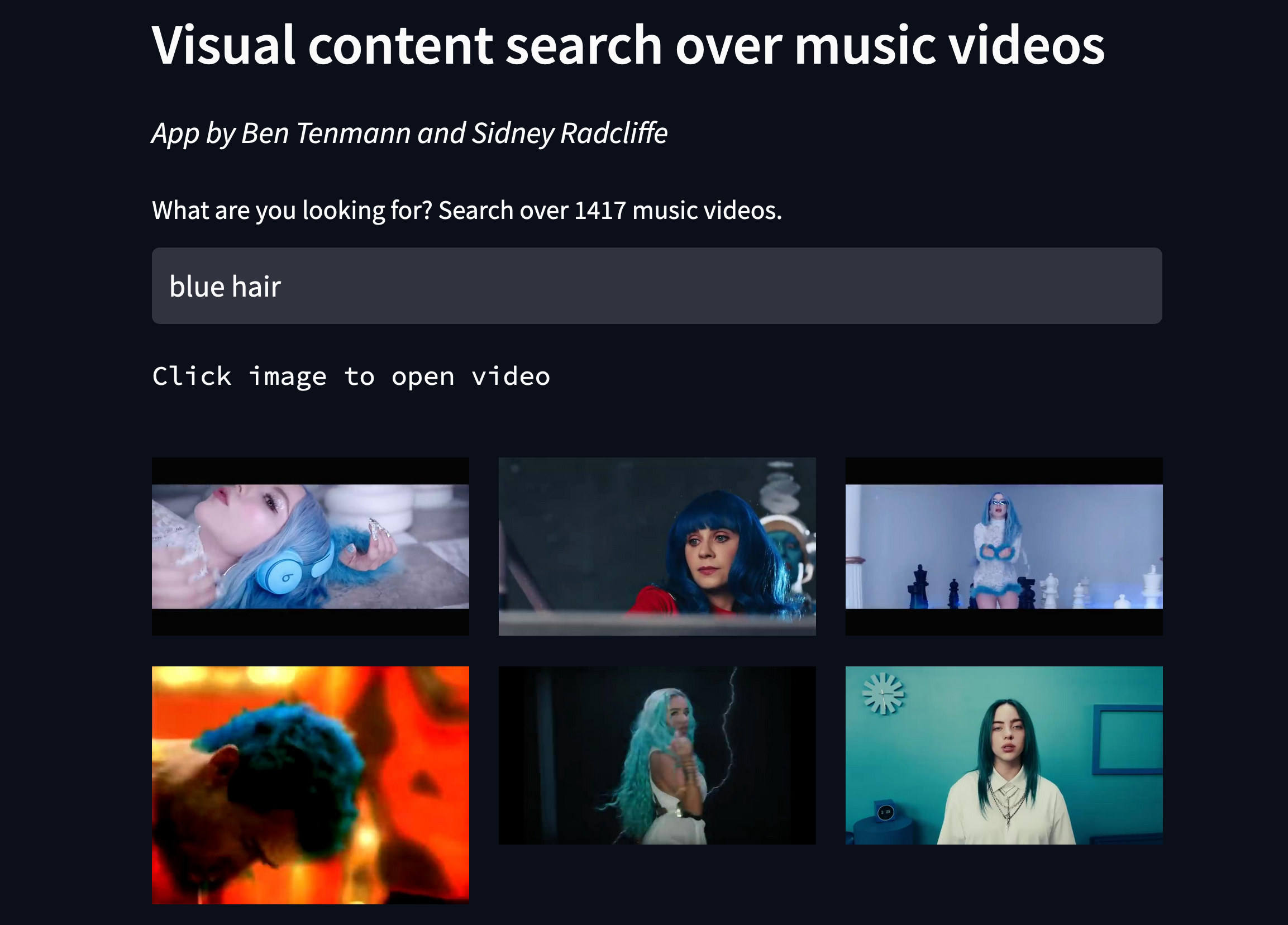

Visual content search over music videos - demo

This morning I came across a great introduction to embeddings, “Embeddings: What they are and why they matter”, and it reminded me that I never got around to writing up a demo a friend and I made using this technology. As the post mentions, retrieval via embeddings applies not just to text but to pretty much any content you can train neural networks on, including images.

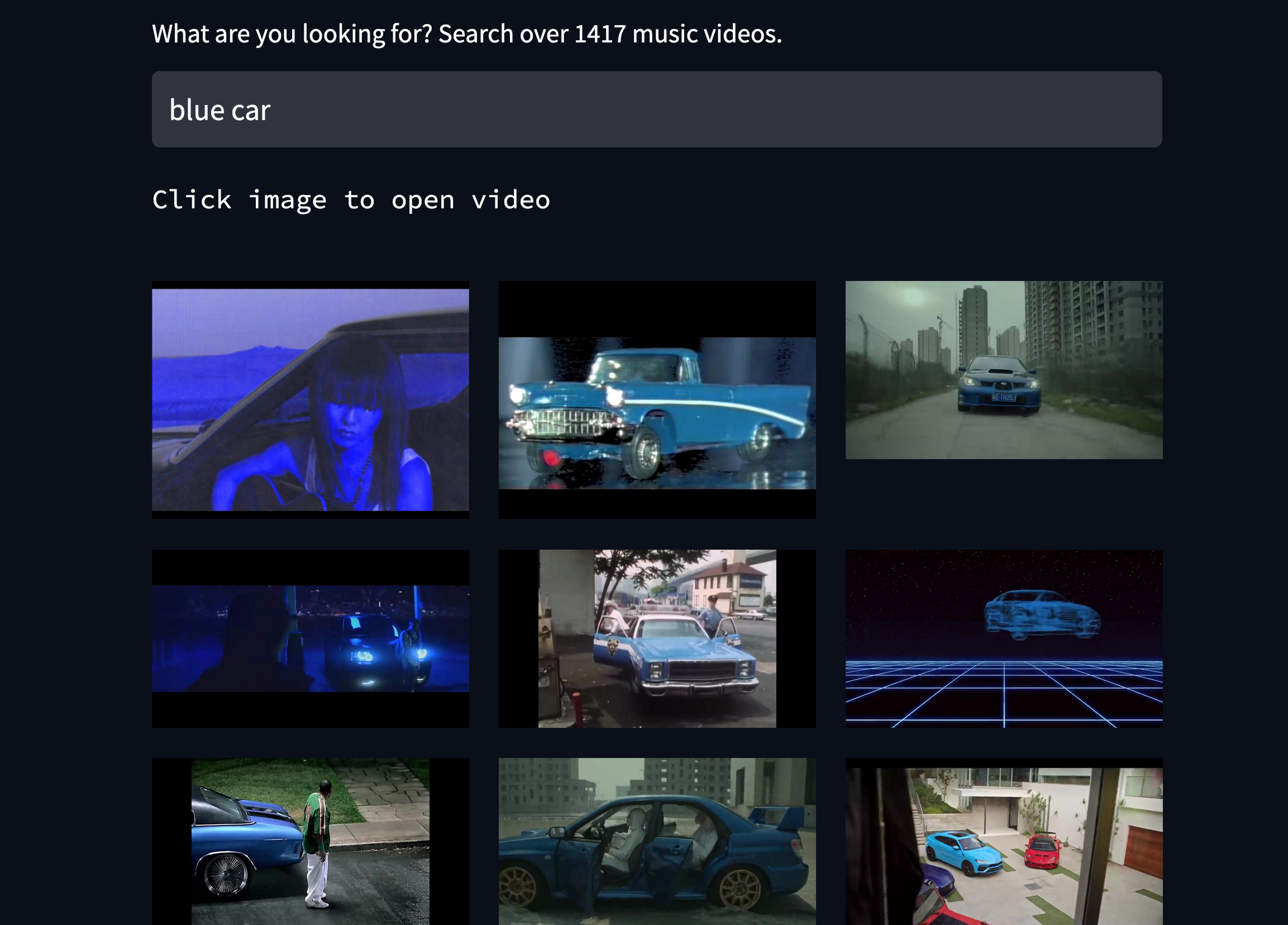

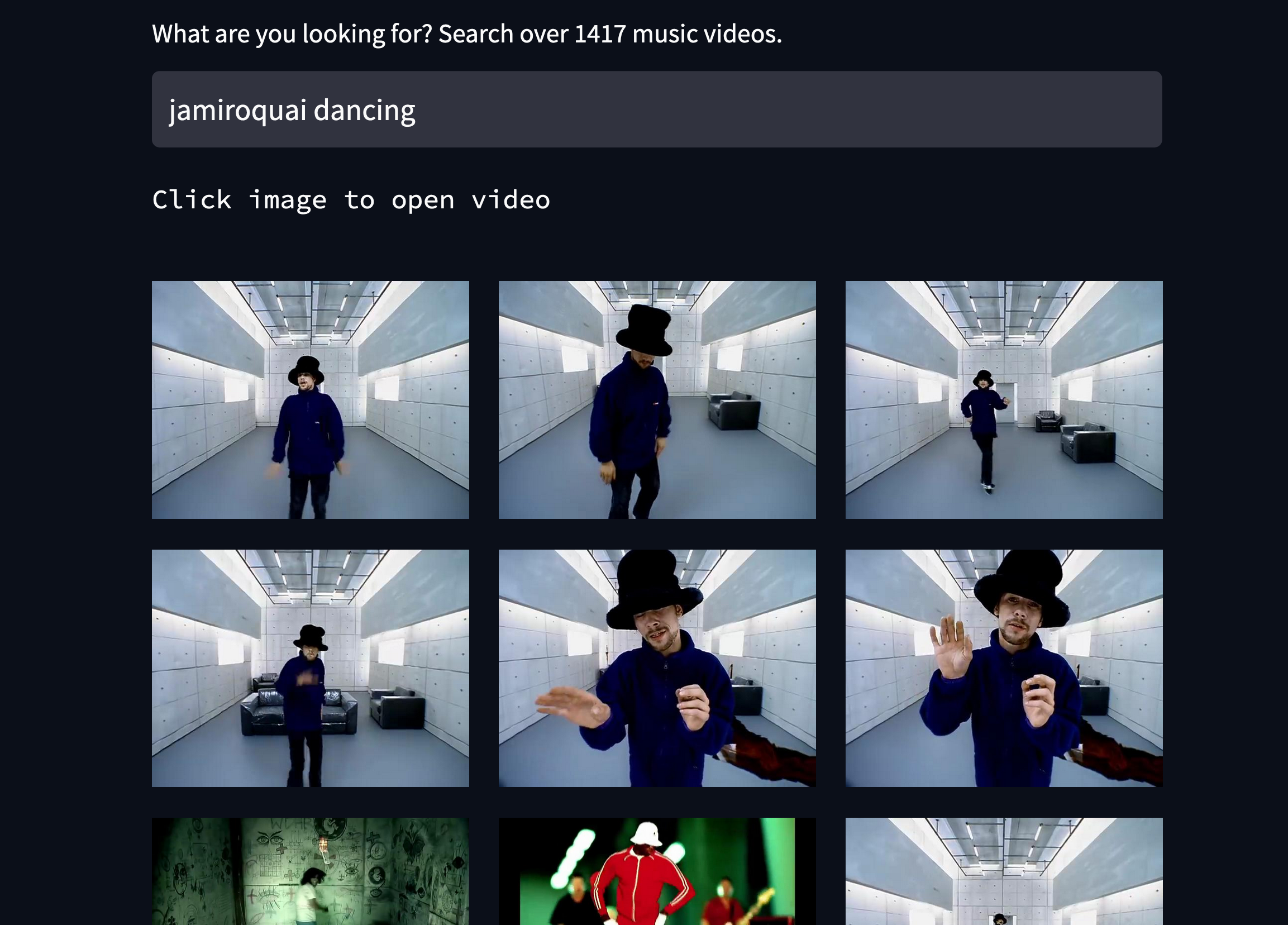

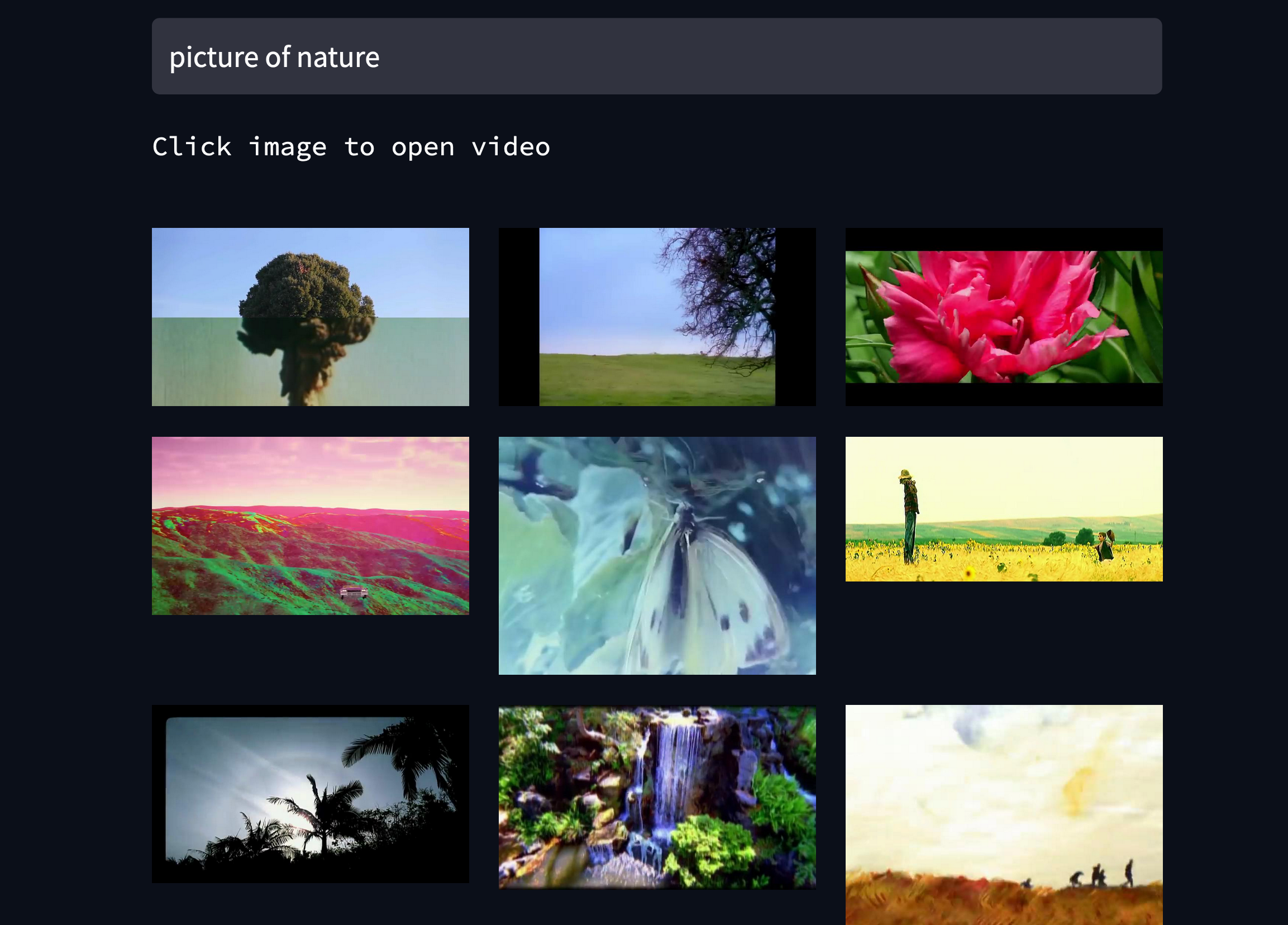

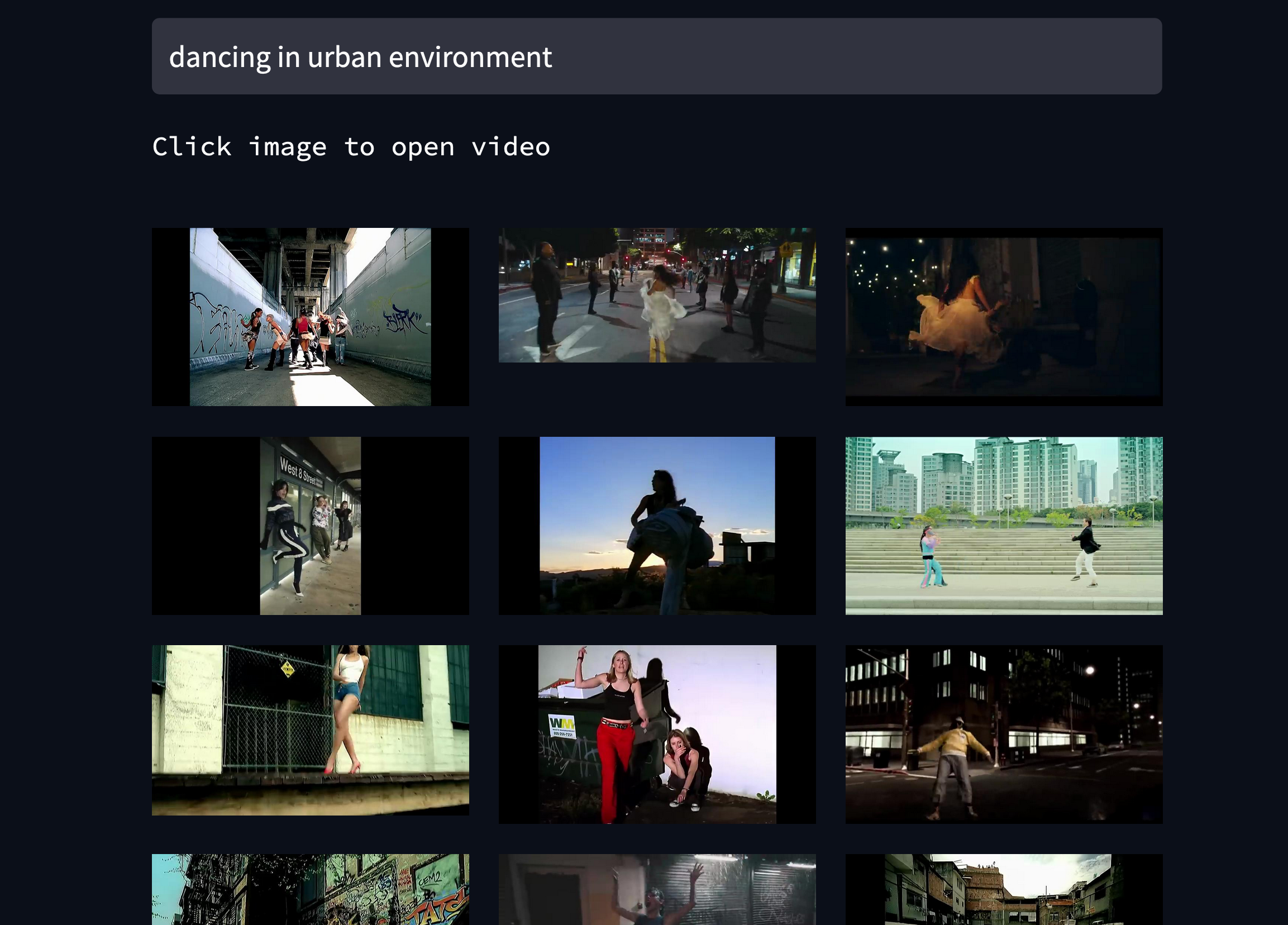

For our demo, we took ~1400 music videos and turned the frames into embeddings, making it possible to search over the visual content of the videos. You can try it out here. The source code is here. Here are some examples:

I wrote a more detailed post about how to implement this kind of thing here. Ben wrote about the demo here.
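For a rough idea of the retrieval step, here is a minimal sketch, not the demo's actual code. It assumes every frame has already been turned into an embedding by some model that maps text queries and images into the same vector space (the choice of model is left open here), and that the query has been embedded the same way. The names `frame_index` and `search`, and the toy 3-dimensional vectors, are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_embedding, frame_index, top_k=3):
    """Return the top_k frames as (score, video_id, timestamp), best first."""
    scored = [
        (cosine_similarity(query_embedding, embedding), video_id, timestamp)
        for video_id, timestamp, embedding in frame_index
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:top_k]


# Hypothetical index: (video_id, timestamp in seconds, frame embedding).
# Real embeddings would have hundreds of dimensions.
frame_index = [
    ("video_a", 12.0, [0.9, 0.1, 0.0]),
    ("video_a", 47.5, [0.1, 0.9, 0.1]),
    ("video_b", 3.0, [0.8, 0.2, 0.1]),
]

# Stand-in for the embedding of a text query.
query = [1.0, 0.0, 0.0]
results = search(query, frame_index, top_k=2)
```

A brute-force scan like this is fine for a toy index. With every frame of ~1400 videos you would more likely reach for a vector index or at least vectorised math, but the idea is the same: embed the query, then rank frames by similarity.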

There are a few improvements we could make to this:

- increase the number of videos (so there’s more chance you’ll find what you’re looking for)

- remove very similar frames

- group frames by video source

- [your suggestions here…]
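Two of these ideas, removing very similar frames and grouping frames by video, could be sketched roughly as follows. This is one possible approach and not something the demo does: the 0.98 similarity threshold is an arbitrary assumption, and `drop_near_duplicates` and `group_by_video` are hypothetical helper names.

```python
import math
from collections import defaultdict


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def drop_near_duplicates(frames, threshold=0.98):
    """Keep a frame only if no already-kept frame from the same video
    has cosine similarity >= threshold (assumed value, tune to taste)."""
    kept = []
    for video_id, timestamp, embedding in frames:
        is_duplicate = any(
            kept_video == video_id
            and cosine_similarity(embedding, kept_embedding) >= threshold
            for kept_video, _, kept_embedding in kept
        )
        if not is_duplicate:
            kept.append((video_id, timestamp, embedding))
    return kept


def group_by_video(results):
    """Turn (score, video_id, timestamp) results into
    {video_id: [(score, timestamp), ...]}, preserving result order."""
    grouped = defaultdict(list)
    for score, video_id, timestamp in results:
        grouped[video_id].append((score, timestamp))
    return dict(grouped)


# Toy data: two nearly identical frames from video_a, one distinct frame,
# and a frame from video_b that matches video_a's first frame exactly.
frames = [
    ("video_a", 1.0, [1.0, 0.0]),
    ("video_a", 1.5, [0.999, 0.01]),
    ("video_a", 9.0, [0.0, 1.0]),
    ("video_b", 2.0, [1.0, 0.0]),
]
unique_frames = drop_near_duplicates(frames)

grouped = group_by_video([
    (0.95, "video_a", 1.0),
    (0.90, "video_b", 2.0),
    (0.40, "video_a", 9.0),
])
```

Deduplicating only within a video is a deliberate choice in this sketch: two different videos with a similar-looking frame both stay searchable.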

(Looking forward to seeing video services implement this!)